Instantly Solving SEO and Providing SSR for Modern JavaScript Websites Independently of Frontend and Backend Stacks

What is the problem anyway?

Whenever you develop a website with a modern frontend JavaScript framework such as React.js, Vue.js or Angular, sooner or later you have to deal with the painful, eternal SEO problem. Many search engine crawlers don't execute JavaScript at all, and those that do may index JavaScript-generated content late or incompletely. Either way, they often never see the final DOM that holds most of your page's valuable content. Search engines see almost nothing of value in your HTML body, and your SEO rankings suffer.

Native framework SSR (server-side rendering) and/or building your website as an isomorphic app can be the ideal solution, but it has to be planned from your very first line of code, its complexity grows with your web app, and a single dependency that doesn't support SSR can break it. Simpler websites (small commercial websites, technical documentation websites, etc.) may just use a static site generator such as Gatsby or Docusaurus to solve this problem. But if you're dealing with a more complex web app, such frameworks are rarely a good fit. And if you have a big project that's already in production, native framework SSR might be too complex to adopt this late. That is how SEO became an eternal problem for modern web apps.

However, in 2017 Google shipped "headless" Chrome, starting with version 59. Together with the Chrome DevTools Protocol, this opened a new world for developers to control Chrome remotely. Headless Chrome is mainly used for automated testing, but, most interestingly, it also turned out to be an unlikely yet complete solution to the eternal SEO problem: one that is totally independent of whatever frontend frameworks, stacks, versions, dependencies or backend stacks you might use! Sounds too good to be true, right?

Rendora?

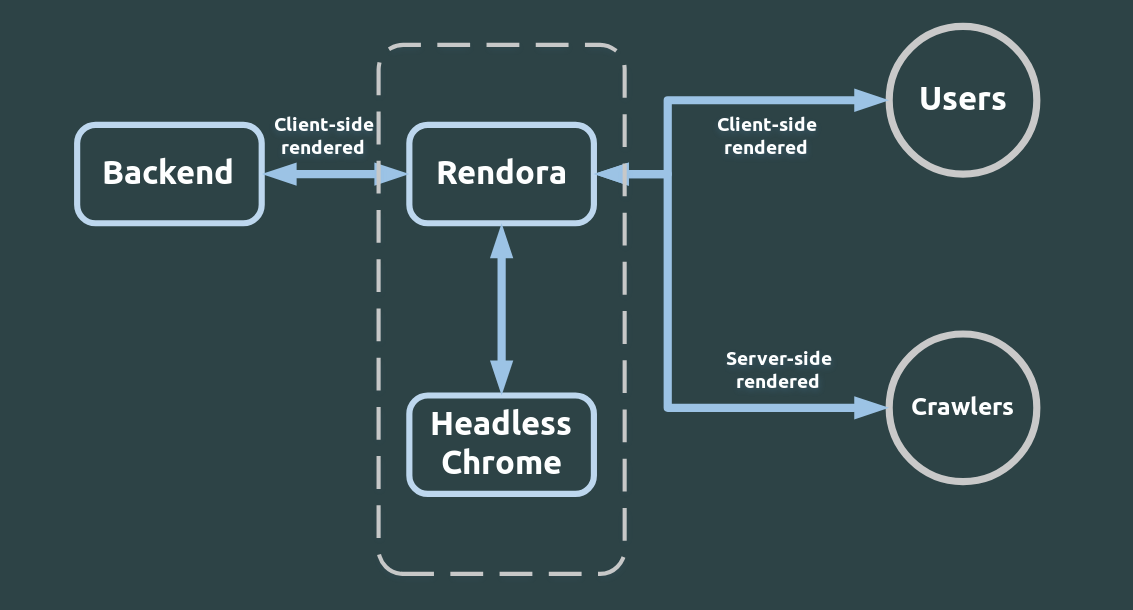

Rendora is a new FOSS golang project that has been trending on GitHub for the past few days and deserves some attention. Rendora is a dynamic renderer that uses headless Chrome to effortlessly provide SSR to web crawlers and thus improve SEO. Dynamic rendering simply means that the server provides server-side rendered HTML to web crawlers such as Googlebot and Bingbot, while providing the typical initial HTML to normal users so that it is rendered on the client side. Dynamic rendering has recently been recommended by both Google and Bing, and was also discussed at Google I/O '18.

Rendora works as a reverse HTTP proxy in front of your backend server (e.g. Node.js, Golang, Django, etc.) and checks incoming requests against the configuration file. If it detects a "whitelisted" request for server-side rendering, it commands headless Chrome to request and render the corresponding page, then returns the final SSR'ed HTML response to the client. If the request is blacklisted, Rendora simply acts as a plain reverse HTTP proxy and returns the backend's response as is. Rendora differs from the other great project in this area, Rendertron, in two ways. First, it offers better performance by being written in golang instead of Node.js, by caching SSR'ed pages, and by skipping unnecessary assets such as fonts and images, which slow down rendering in headless Chrome. Second, it doesn't require any change to either your backend or your frontend code! Let's see Rendora in action to understand how it works.

Rendora in action

Let's write the simplest possible React.js application:

import * as React from "react"
import * as ReactDOM from "react-dom"

class App extends React.Component {
  render() {
    return (
      <div>
        <h1>Hello World!</h1>
      </div>
    )
  }
}

ReactDOM.render(
  <App />,
  document.getElementById("app")
)

Now let's build it into plain, browser-ready JavaScript using webpack and Babel, which produces the final bundle, bundle.js. Then let's write a simple index.html file:

<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
  </head>
  <body>
    <div id="app"></div>
    <script src="/bundle.js"></script>
  </body>
</html>

Now let's serve index.html with any simple HTTP server; I wrote one in golang that listens on port 8000. If you now open 127.0.0.1:8000 in your browser and view the page source, you will simply see exactly the HTML above. That's expected, since the Hello World header of our React app is generated and added to the DOM only after the browser's JavaScript engine executes bundle.js. Now let's put Rendora to use and write a simple config file in YAML:

listen:
  port: 3001
backend:
  url: 127.0.0.1:8000
target:
  url: 127.0.0.1:8000
filters:
  userAgent:
    defaultPolicy: whitelist

What does this config file mean? We told Rendora to listen on port 3001; that our backend is reachable at 127.0.0.1:8000, so Rendora proxies requests to and from it; and that our headless Chrome instance should use that same address as the target URL for whitelisted requests. Since we set the default user-agent policy to whitelist for the sake of this tutorial, every request is eligible for server-side rendering. Now let's run headless Chrome and Rendora. I will use Rendora's provided Docker images:

docker run --tmpfs /tmp --net=host rendora/chrome-headless

docker run --net=host -v ~/config.yaml:/etc/rendora/config.yaml rendora/rendora

Now comes the big moment: let's open our page again, but this time through Rendora at 127.0.0.1:3001. If we check the page source now, it will be:

<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
  </head>
  <body>
    <div id="app"><div><h1>Hello World!</h1></div></div>
    <script src="/bundle.js"></script>
  </body>
</html>

Did you see the difference? The content inside <div id="app"></div> is now part of the HTML sent by the server. It's that easy! Whether you use React, Vue, Angular or Preact, with whatever versions and dependencies; whatever your backend stack is (e.g. Node.js, Golang, Django, etc.); and whether you have a very complex website with complex components or just a "Hello World" app, writing that YAML configuration file is all you need to provide SSR to search engines.

It's worth mentioning that you normally don't want to whitelist all requests. Instead, you whitelist only certain user-agent keywords corresponding to web crawlers (e.g. googlebot, bingbot, etc.) while keeping the default policy as blacklist.
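The whitelist/blacklist decision itself can be modeled in a few lines of golang. The sketch below assumes a particular semantics (a default policy, plus case-insensitive keywords that flip it for matching user agents) and uses hypothetical names (`UserAgentFilter`, `ShouldRender`). It is not Rendora's filter code, and the exact YAML keys for keyword exceptions should be checked in Rendora's own documentation.

```go
package main

import (
	"fmt"
	"strings"
)

// Policy is either "whitelist" (render with headless Chrome) or
// "blacklist" (proxy to the backend unchanged).
type Policy string

const (
	Whitelist Policy = "whitelist"
	Blacklist Policy = "blacklist"
)

// UserAgentFilter is a hypothetical model of the filter described above:
// a default policy plus keywords that flip it for matching user agents.
type UserAgentFilter struct {
	DefaultPolicy Policy
	Keywords      []string // matched case-insensitively as substrings
}

// ShouldRender reports whether a request with this User-Agent should be SSR'ed.
func (f UserAgentFilter) ShouldRender(userAgent string) bool {
	ua := strings.ToLower(userAgent)
	matched := false
	for _, kw := range f.Keywords {
		if strings.Contains(ua, strings.ToLower(kw)) {
			matched = true
			break
		}
	}
	if f.DefaultPolicy == Whitelist {
		return !matched // keywords act as exceptions to "render everything"
	}
	return matched // default blacklist: only matching crawlers are rendered
}

func main() {
	crawlersOnly := UserAgentFilter{DefaultPolicy: Blacklist, Keywords: []string{"googlebot", "bingbot"}}
	for _, ua := range []string{
		"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
		"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
		"Mozilla/5.0 (X11; Linux x86_64; rv:64.0) Gecko/20100101 Firefox/64.0",
	} {
		fmt.Printf("%t\t%s\n", crawlersOnly.ShouldRender(ua), ua)
	}
}
```

With the crawler-only filter, the Googlebot and Bingbot user agents print `true` and the Firefox one prints `false`, which is the recommended production setup: browsers keep getting the normal client-side rendered page.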

Rendora also provides Prometheus metrics so that you can get a histogram of the SSR latencies and other important counters such as the total number of requests, total number of SSR'ed requests and total number of cached SSR'ed requests.

Are you required to use Rendora as a reverse HTTP proxy in front of your backend server to get it working? Fortunately, the answer is NO! Rendora provides another, optional HTTP API server, listening on port 9242 by default, that exposes a rendering endpoint. So you can implement your own filtering logic and just ask Rendora for the SSR'ed page. Let's try it and ask Rendora to render the above page again, but this time through the API rendering endpoint with curl:

curl --header "Content-Type: application/json" --data '{"uri": "/"}' -X POST 127.0.0.1:9242/render

You simply get a JSON response:

{
  "status":200,
  "content":"<!DOCTYPE html><html lang=\"en\"><head>\n <meta charset=\"UTF-8\">\n</head>\n\n<body>\n <div id=\"app\"><div><h1>Hello World!</h1></div></div>\n <script src=\"/bundle.js\"></script>\n\n\n</body></html>",
  "headers":{"Content-Length":"173","Content-Type":"text/html; charset=utf-8","Date":"Sun, 16 Dec 2018 20:28:23 GMT"},
  "latency":15.307418
}

You may have noticed that rendering this "Hello World" React app took only around 15 ms on my old and very busy machine, without any caching! Headless Chrome and Rendora really are that fast, at least for a page this small.

Other uses

While Rendora is mainly meant for server-side rendering (SSR), you can easily use its API to scrape websites whose DOM is mostly generated by JavaScript.